After hearing rumors that the CUDA Toolkit we’re using for Triton might not work well with the new NVidia 10-series cards, we ordered an NVidia GTX1080 for the office. You know, in the name of science.

My god, this thing is amazing.

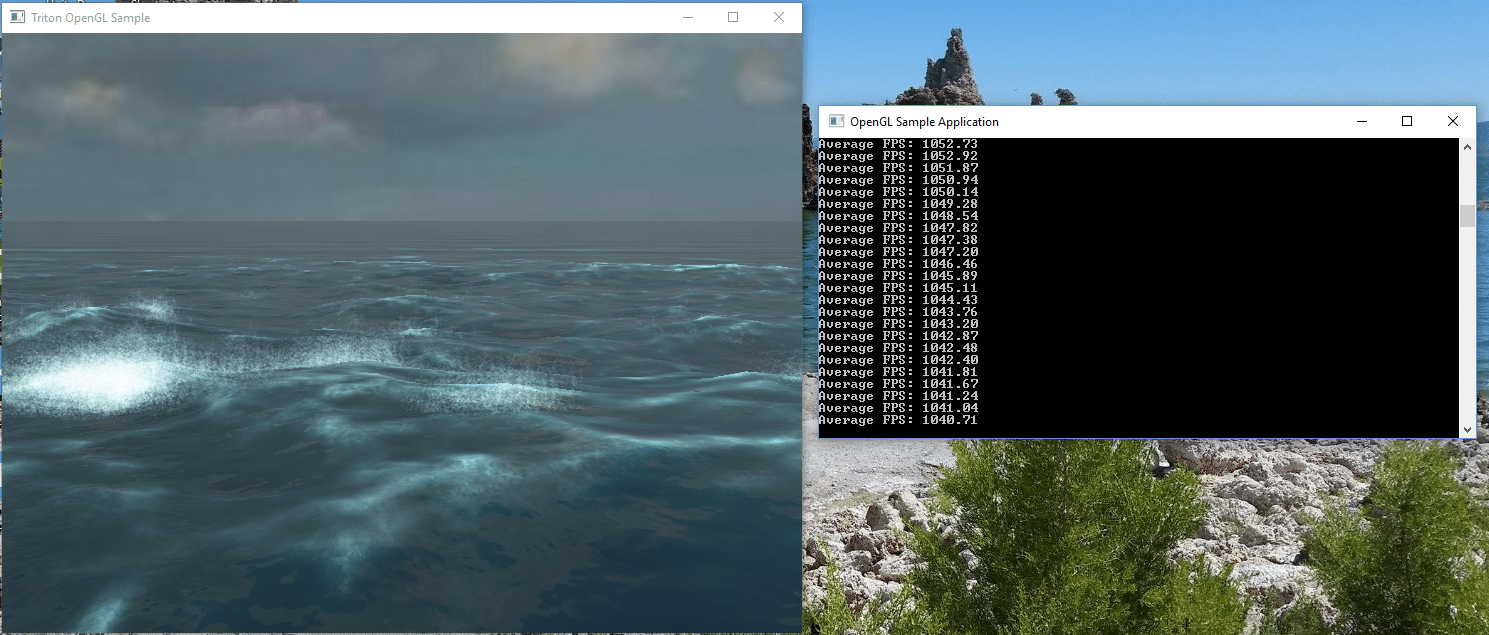

First of all, Triton seems to work just fine with the 1080. We’re using the latest NVidia driver (372.70) and the latest Triton Ocean SDK (3.71), and it runs like a champ.

In fact, it’s more than a champ. For the first time, I’m seeing Triton render its 3D waves in our sample application at over 1000 frames per second. See the image above for proof! With a GTX970, the most we ever saw was in the 700s.
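To put those frame rates in terms of time per frame, here is a quick back-of-the-envelope sketch. The `frame_time_ms` helper is just an illustrative name, and 700 fps is an assumed stand-in for "in the 700s" rather than a measured figure.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a frame rate (frames per second) to milliseconds per frame."""
    return 1000.0 / fps


# Figures from the post: over 1000 fps on the GTX1080.
# 700 fps is an assumption standing in for "in the 700s" on the GTX970.
gtx1080_ms = frame_time_ms(1000.0)
gtx970_ms = frame_time_ms(700.0)

print(f"GTX1080: {gtx1080_ms:.2f} ms per frame")
print(f"GTX970:  {gtx970_ms:.2f} ms per frame")
print(f"Saved:   {gtx970_ms - gtx1080_ms:.2f} ms per frame")
```

Under those assumptions, each frame of the sample application takes about 1 ms instead of roughly 1.4 ms, which is time you get back for the rest of your scene.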

SilverLining also benefits from the GTX1080’s increased fill rate; the depth complexity of our clouds makes that especially important.

Of course, many of you are going to eat up these performance gains by moving to higher resolutions such as 4K at the same time. But for now, I’m still trying to pick my jaw up from the floor seeing Triton simulate its thousands of physically realistic 3D waves over 1000 times per second on consumer hardware! It’s awesome to see.

If you’re on the fence about upgrading your simulation system to the new 10-series cards from NVidia, my opinion is that it’s likely worth getting one to test with.

Oh, and if anyone wants to buy a used GTX970 card, let me know…